Avoiding the Air Canada Chatbot Fiasco

Why Testing and Evaluating Your AI System is Essential

AI isn’t like traditional software. Historically, when you used a computer, input X would always give you output Y. With AI, you give it input X and get back output "Mostly Y" or "Some of X".
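Here is a toy illustration of that difference. It uses Python’s standard `random` module as a stand-in for an LLM’s sampling step. When sampling is enabled, real models pick tokens from a probability distribution. This sketch simplifies that and picks whole canned answers. The answers, weights, and function names are all made up.

```python
import random

def traditional_lookup(booking_id: str) -> str:
    # Deterministic: the same input always produces the same output.
    return f"Booking {booking_id}: refundable within 24 hours"

def toy_language_model(prompt: str, rng: random.Random) -> str:
    # Hypothetical stand-in for an LLM: it samples one of several canned
    # answers, so the same prompt can produce different outputs.
    candidates = [
        "Refunds are available within 24 hours of booking.",
        "Refunds are available within 24 hours, with some exceptions.",
        "You can request a refund at any time after your trip.",  # plausible but wrong
    ]
    weights = [0.7, 0.2, 0.1]
    return rng.choices(candidates, weights=weights, k=1)[0]

rng = random.Random(42)  # seeded so the run is reproducible
prompt = "What is your refund policy?"

# Ask each system the same question 200 times and collect the distinct answers.
lookups = {traditional_lookup("A123") for _ in range(200)}
answers = {toy_language_model(prompt, rng) for _ in range(200)}

print(len(lookups))  # 1
print(sorted(answers))
```

The randomness itself is not the issue. The consequence is: you can’t verify the second function by checking one output. You have to measure how often it is right.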

To put it into a real context: a customer asked Air Canada’s chatbot about the airline’s bereavement fare refund policy, and the chatbot hallucinated a policy that didn’t exist. A Canadian tribunal then forced Air Canada to honor the policy its chatbot had made up on the fly.

How do you know whether your AI is returning correct results?

Test.

To quote Greg Brockman, President of OpenAI: “Evals are surprisingly often all you need”.

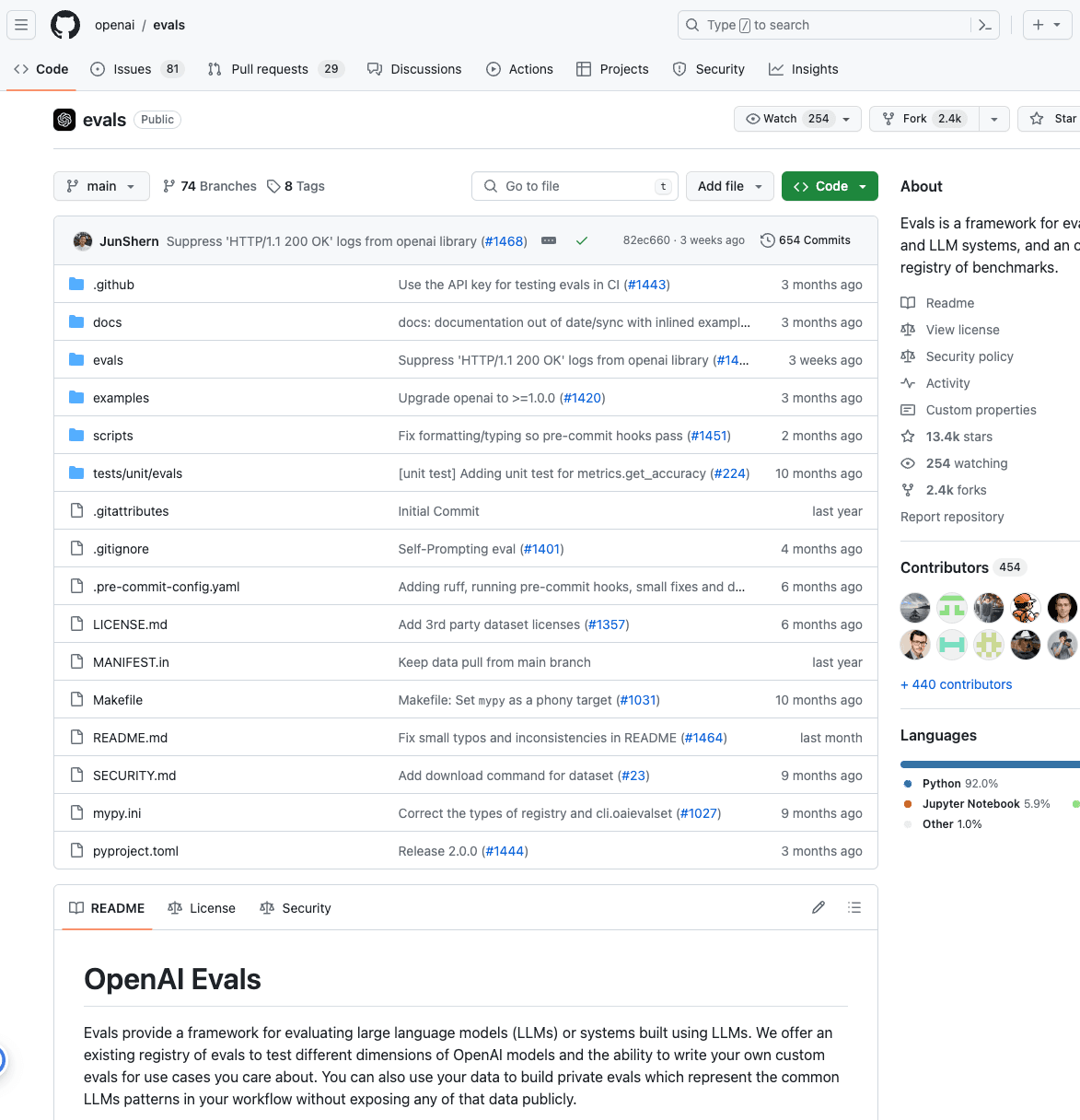

Evals (short for evaluations) are tests that score how well an LLM performs on a given task. They are so important that OpenAI open-sourced its Evals framework, which it used (in part) to evaluate its own models. You can download it from the Evals project on GitHub.
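Here is a minimal sketch of what “a score of how good an LLM is” means in practice. You run the model over a set of prompts with known ideal answers and report the fraction it gets right. `EvalCase`, `run_eval`, and `fake_model` are hypothetical names for illustration and are not part of OpenAI Evals. Exact matching is only one of many possible grading rules.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class EvalCase:
    prompt: str
    ideal: str  # the answer we consider correct

def run_eval(model: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Return the fraction of cases where the model's answer matches the ideal answer."""
    if not cases:
        raise ValueError("an eval needs at least one case")
    passed = 0
    for case in cases:
        answer = model(case.prompt)
        # Exact match after normalizing letter case and surrounding whitespace.
        if answer.strip().lower() == case.ideal.strip().lower():
            passed += 1
    return passed / len(cases)

def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call.
    canned = {
        "What is 2 + 2?": "4",
        "What is the capital of France?": "Paris",
        "How many days are in a week?": "Eight",
    }
    return canned.get(prompt, "I don't know")

cases = [
    EvalCase("What is 2 + 2?", "4"),
    EvalCase("What is the capital of France?", "paris"),
    EvalCase("How many days are in a week?", "7"),
]
score = run_eval(fake_model, cases)
print(f"score: {score:.2f}")  # score: 0.67
```

The single number is what makes evals useful. You can track it over time and compare it across model versions, prompts, or configurations.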

Share this post with your tech teams and make sure they have this under control. If there’s no quality assurance in place for your underlying system, it’s only a matter of time before something changes and your AI system stops working.
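One way to put that quality assurance in place is to rerun the same eval whenever the model, prompt, or data changes, and block the release if the score regresses. The sketch below assumes you already have a score for the current version (baseline) and for the new one (candidate). The thresholds 0.90 and 0.02 are arbitrary placeholders. `check_regression` is a hypothetical helper, not an OpenAI Evals API.

```python
def check_regression(baseline: float, candidate: float,
                     min_score: float = 0.90, max_drop: float = 0.02) -> list[str]:
    """Return reasons to block a release; an empty list means the candidate passes."""
    problems = []
    # Absolute floor: never ship a version that scores below this.
    if candidate < min_score:
        problems.append(f"score {candidate:.2f} is below the floor of {min_score:.2f}")
    # Relative check: don't accept a meaningful drop versus the current version.
    if baseline - candidate > max_drop:
        problems.append(f"score dropped by {baseline - candidate:.2f} (allowed: {max_drop:.2f})")
    return problems

# Hypothetical scores, e.g. from running the same eval against two model versions.
problems = check_regression(baseline=0.94, candidate=0.88)
for reason in problems:
    print("BLOCK RELEASE:", reason)
```

In a CI pipeline, you would typically fail the job whenever the returned list is non-empty.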

https://github.com/openai/evals

The "OpenAI Evals" Git project is designed for developers and researchers working with large language models (LLMs). It matters to technical teams for four reasons:

Evaluating LLMs: It provides a framework for evaluating LLMs or systems built using LLMs, which is crucial for understanding their performance and behavior.

Quality Assurance: Creating high-quality evals is essential for accurately assessing how different model versions might impact a particular use case.

Privacy: The framework supports the creation of private evals, enabling users to evaluate LLMs on their data without exposing it publicly.

Customization: Users can write their own custom evals for specific use cases, allowing for tailored assessments that align with their unique requirements.
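The sketch below makes the customization and privacy points concrete. It follows the JSONL sample shape shown in the repository’s documentation for basic evals: one JSON object per line, each with a chat-style `input` and an `ideal` answer. The sketch writes two samples for a made-up refund policy, reads them back, and grades a stubbed model locally. The policy, `stub_model`, and `grade` are hypothetical. The "starts with the ideal answer" rule only approximates the framework’s match-style grading. To run samples through the real framework, you would register them as an eval by following the repository’s documentation.

```python
import json
import tempfile
from pathlib import Path

# Made-up policy used only for this illustration.
POLICY = "Policy: tickets are refundable within 24 hours of booking."

# Each sample: a chat-style "input" plus the "ideal" answer.
SAMPLES = [
    {
        "input": [
            {"role": "system", "content": POLICY + " Answer Yes or No."},
            {"role": "user", "content": "Can I get a refund 3 days after booking?"},
        ],
        "ideal": "No",
    },
    {
        "input": [
            {"role": "system", "content": POLICY + " Answer Yes or No."},
            {"role": "user", "content": "Can I get a refund 2 hours after booking?"},
        ],
        "ideal": "Yes",
    },
]

def write_samples(path: Path, samples: list[dict]) -> None:
    # JSONL: one JSON object per line. Keep the file somewhere private
    # if it contains proprietary or customer data.
    with path.open("w", encoding="utf-8") as f:
        for sample in samples:
            f.write(json.dumps(sample) + "\n")

def load_samples(path: Path) -> list[dict]:
    with path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]

def grade(completion: str, ideal) -> bool:
    # Pass if the completion starts with the ideal answer (or any of several).
    ideals = [ideal] if isinstance(ideal, str) else list(ideal)
    return any(completion.strip().startswith(answer) for answer in ideals)

def stub_model(messages: list[dict]) -> str:
    # Hypothetical model that always says no; swap in a real LLM call here.
    return "No."

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "samples.jsonl"
    write_samples(path, SAMPLES)
    loaded = load_samples(path)

results = [grade(stub_model(s["input"]), s["ideal"]) for s in loaded]
score = sum(results) / len(results)
print(f"{sum(results)}/{len(results)} passed, score {score:.2f}")  # 1/2 passed, score 0.50
```

The samples live in a file you control, so the test data can stay inside your organization while still exercising the exact use case you care about.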